Executive Summary

Your organization faces an invisible risk. While your employees boost productivity with ChatGPT and similar AI tools, they’re creating security holes you can’t see or control. New research reveals that 83% of organizations operate without basic technical controls to prevent data leaks through these integrations.

This guide provides a comprehensive roadmap for understanding and addressing the security and compliance risks of enterprise AI adoption. You’ll learn the technical mechanisms behind the exposure, understand your regulatory obligations, and discover how to implement controls that protect your data without stifling innovation.

AI Security Crisis

Hidden Reality Behind AI Adoption

69% of organizations view AI’s rapidly evolving ecosystem as their top security concern—ranking it higher than traditional threats like ransomware or data breaches

Security concerns have skyrocketed 60% in just six months among enterprise leaders

Privacy worries have exploded from 43% to 69% in just two quarters

64% of organizations worry about data integrity attacks where adversaries could inject bias or poison AI models

Introduction: AI Integration Explosion

Picture a typical Tuesday morning. Sarah, a financial analyst at your company, needs to create a quarterly presentation. She opens ChatGPT, clicks “Connect to OneDrive,” and within seconds has given an external system access to thousands of internal documents. No security review occurred. IT wasn’t notified. Your data governance policies didn’t trigger.

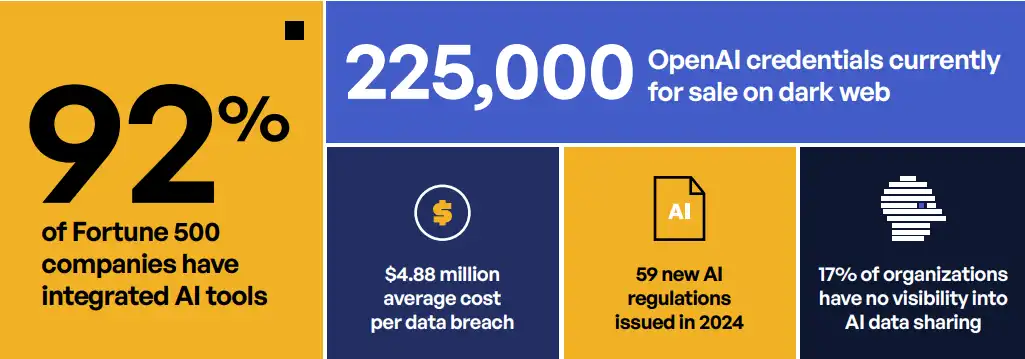

This scenario repeats thousands of times daily across enterprises worldwide. 92% of Fortune 500 companies have already integrated ChatGPT into their operations, processing over 1 billion queries daily. The promise is clear: unprecedented productivity gains, automated workflows, and competitive advantages.

But there’s a hidden cost. Traditional security measures—firewalls, endpoint protection, data loss prevention—were designed for a different era. They monitor network perimeters and scan for malware, but they can’t see when an employee copies sensitive text into a chat window or grants broad OAuth permissions to an AI service.

Deployment Acceleration Crisis

The surge in security anxiety directly correlates with massive acceleration in Al deployment. 90% of organizations have moved past experimentation, with 33% achieving full deployment of Al agents. This represents a quantum leap from previous quarters when deployment rates remained stuck at 11%.

This deployment acceleration has exposed a critical gap between controlled pilot programs and real-world implementation. The honeymoon phase is over, and organizations are discovering that the security challenges intensify exponentially as Al becomes embedded in core business processes.

90% of organizations have moved past experimentation, with 33% achieving full deployment of Al agents.

Technical Architecture of AI Integration Risk

Understanding OAuth Permissions

When employees connect AI tools to enterprise systems, they’re not just sharing a single document. They’re establishing persistent connections through OAuth 2.0 authentication flows that grant extensive, ongoing access.

Here’s what actually happens during a typical integration:

- Initial Authorization: Employee clicks “Connect to OneDrive”

- Permission Request: AI platform requests broad scopes:

- Files.Read.All – Read all files the user can access

- Files.ReadWrite.All – Modify any accessible file

- Sites.Read.All – Access SharePoint sites

- User.Read – Profile information

- Token Generation: Long-lived refresh tokens created

- Persistent Access: Connection remains active indefinitely

Critical Issue: These permissions often exceed what employees could access through normal channels. An AI integration might gain access to shared drives, team sites, and archived data that the employee rarely uses but technically has permission to view.

Technical Deep Dive: Token Persistence

OAuth refresh tokens for major platforms typically have these lifespans:

- Microsoft 365: 90 days (with sliding window renewal)

- Google Workspace: No expiration until revoked

- Box: 60 days

Each use can extend the window, creating effectively permanent access.

API Integration Architecture

Modern AI platforms connect through three primary mechanisms:

1. Direct Enterprise Integrations: Prebuilt connectors that grant extensive access

-

Microsoft Office 365/OneDrive:

- Access to all SharePoint sites

- Email archives through Exchange Online

- Teams chat history and files

- Calendar and contact information

- Drive files across all shared drives

- Gmail message content

- Google Docs editing history

- Calendar and meeting recordings

- All folders user can access

- Shared links and collaborations

- Version history and comments

- Admin-level permissions if user has them

- Customer records and contact lists

- Sales pipeline and forecasts

- Custom object data

- Connected app permissions

- Marketing automation data

- Customer interaction history

- Email templates and campaigns

- Integration with other marketing tools

Google Workspace:

Box Enterprise:

Salesforce:

HubSpot CRM:

The Permission Cascade Problem: When an employee with broad access connects ChatGPT to Office 365, AI gains access to:

- Every SharePoint site they can view (often thousands of documents)

- All OneDrive files including archived data

- Shared mailboxes and distribution lists

- Teams channels across the organization

Case in Point: Office 365 Integration Risks

A single “Connect to Microsoft” click grants these permissions:

- Files.Read.All – Every file in OneDrive and SharePoint

- Mail.Read – All email messages

- Calendars.Read – Meeting details and attendees

- Sites.Read.All – Every SharePoint site

- User.Read.All – Directory information

These permissions persist until manually revoked and refresh automatically.

2. Browser Extensions: JavaScript-based plugins that can:

- Read all webpage content

- Access browser storage

- Intercept form submissions

- Modify page content

3. Desktop/Mobile Apps: Native applications with:

- File system access

- Clipboard monitoring

- Screen capture capabilities

- Background synchronization

Each integration point multiplies the attack surface. A single compromised OAuth token can expose years of accumulated data across multiple platforms.

API Explosion Challenge

Adding to this complexity is the explosion of application programming interfaces (APIs). 34% of enterprises now use more than 500

APIs, with manufacturing companies seeing this figure rise to 50%. Each API represents a potential entry point for attackers, creating

an attack surface that’s growing faster than most security teams can monitor or protect.

Data Flow Analysis

Understanding how data moves from your enterprise to AI systems reveals why traditional controls fail:

Once data enters the AI ecosystem, it undergoes several transformations:

- Ingestion: Raw data processed and indexed

- Embedding: Converted to numerical representations

- Training: Incorporated into model updates

- Inference: Can influence future outputs

The permanence problem cannot be overstated: Unlike traditional data breaches where you might revoke access or delete exposed files, information absorbed into AI models becomes part of their fundamental operation.

Security Risk Deep Dive

1. Credential Exposure Crisis

Over 225,000 OpenAI credentials are currently available for purchase on dark web marketplaces. These aren’t from a single breach—they’re harvested continuously through information-stealing malware.

The attack chain typically follows this pattern:

- Initial Infection: Employee downloads malware (often through malicious ads or email attachments)

- Credential Harvesting: Malware extracts stored passwords from browsers

- Underground Markets: Credentials packaged and sold in bulk

- Account Takeover: Purchasers access AI accounts and connected systems

The median remediation time stretches to 94 days—over three months where attackers can freely access your data through compromised AI accounts.

2. Data Integrity Attack Vector

Unlike traditional data breaches that might expose customer records, 64% of organizations worry about data integrity attacks, where adversaries could inject bias or poison AI models with incorrect information. A successful AI integrity attack could corrupt decision-making processes across an entire organization.

Trust becomes another critical issue. 57% of companies question the trustworthiness of AI systems, particularly when these systems make autonomous decisions based on sensitive data. This isn’t just a technical problem—it’s a business risk that could undermine customer confidence and regulatory compliance.

Case Study: The Samsung Wake-Up Call

In 2023, Samsung semiconductor engineers used ChatGPT to optimize proprietary source code. Within weeks, sensitive semiconductor design information and internal meeting notes were exposed. The incident led Samsung to ban ChatGPT use entirely, but the damage was permanent—their intellectual property had already been absorbed into the AI’s training data.

3. Shadow AI: The Invisible Threat

72% of employees access AI tools through personal accounts rather than corporateapproved channels. This “Shadow AI” phenomenon creates blind spots that dwarf traditional Shadow IT concerns.

Consider the scope:

- Personal ChatGPT, Claude, and other AI accounts used on work computers

- Browser extensions that process all page content

- Mobile apps accessing corporate email

- API integrations through personal developer accounts

86% of organizations admit they cannot see these AI data flows. Your data loss prevention tools, SIEM systems, and access logs show nothing when an employee copies a customer list into an AI web interface.

4. Human Oversight Reality Check

Despite AI’s promise of automation, 45% of leaders now require human-in-the-loop oversight for AI systems—a significant increase from just 28% in the previous quarter. This suggests that as organizations gain real-world experience with AI, they’re discovering that autonomous systems create unacceptable risk levels for sensitive business operations.

45% of leaders now require human-in-the-loop oversight for AI systems—a significant increase from just 28% in the previous quarter.

5. Quantifying the Damage

The financial impact extends far beyond immediate breach costs:

- Direct Costs: Average data breach now reaches $4.88 million

- Competitive Loss: Trade secrets and strategic plans exposed to competitors

- Regulatory Fines: GDPR penalties up to €20 million or 4% of global revenue

- Reputation Damage: Customer trust erosion lasting years

- Legal Liability: Shareholder lawsuits and partner contract violations

6. Fragmentation Problem

The complexity is compounded by fragmented tooling. Organizations are using an average of five or more tools for data discovery and classification, and 57% use five or more encryption key managers. This fragmentation creates gaps in policy enforcement and increases the risk of misconfigurations that could expose sensitive AI training data.

Organizations are using an average of five or more tools for data discovery and classification, and 57% use five or more encryption key managers.

Compliance Risk Analysis

1. Regulatory Avalanche

The scale of regulatory change is staggering. Nearly 700 AI-related bills were introduced across 45 states in 2024—a 266% increase from 2023. At the federal level, Congress doubled its AI legislation activity. This isn’t gradual evolution; it’s a regulatory explosion that caught most organizations unprepared.

U.S. agencies issued 59 new AI regulations in 2024 alone, with the White House M-24-10 memorandum requiring all federal agencies to establish AI Governance Boards by December 1, 2024. The patchwork nature of these regulations creates a compliance minefield where a single AI integration might violate multiple overlapping requirements.

2. Compliance Failure Reality

Despite years of regulatory focus, 45% of organizations failed recent compliance audits. More alarmingly, there’s a stark correlation between compliance failures and security breaches: 78% of companies that failed audits also had a history of data breaches, compared to just 21% of those that passed all compliance requirements.

3. Quantum Threat Multiplier

As if AI security challenges weren’t enough, organizations must also prepare for quantum computing threats that could render current encryption methods obsolete. 63% of companies fear that quantum computers will compromise future encryption, while 61% worry about vulnerabilities in key distribution systems.

The “harvest now, decrypt later” threat is particularly concerning for AI applications. 58% of organizations recognize this risk—the possibility that encrypted data stolen today could be decrypted by future quantum computers. For AI systems that rely on historical training data, this creates a compound vulnerability where today’s data security decisions could have consequences decades into the future.

Despite these risks, only 57% of organizations are actively evaluating post-quantum cryptography solutions, and just 33% are relying on cloud or telecommunications providers to manage this transition.

U.S. agencies issued 59 new AI regulations in 2024 alone.

4. GDPR and the OpenAI Precedent

Italy’s €15 million fine against OpenAI established critical precedents that apply to every organization using AI tools:

Primary Violations That Apply to Your Organization:

- Processing personal data without adequate legal basis: When employees paste customer data into ChatGPT or another AI platform, you’re processing that data without consent

- Breach of transparency principles: Your privacy notices likely don’t mention AI tool usage

- Failure to inform users: Customers don’t know their data trains AI models

- Inadequate access controls: No age verification or user restrictions

The Italian authority noted OpenAI’s “cooperative stance” when calculating the fine—suggesting penalties could have been higher. For organizations without OpenAI’s resources to mount a defense, the risk multiplies.

Critical Issue: Research indicates companies could face fines up to 11% of global revenue when both EU AI Act (7%) and GDPR (4%) violations are combined. The EU AI Act, effective August 1, 2024, adds another layer with its risk-based approach categorizing AI

systems into four levels.

5. Compliance Reality Check

The OpenAI fine demonstrates that using AI tools makes you a data processor under GDPR. Every time an employee shares EU citizen data with ChatGPT or another AI platform, you risk:

- Article 5 violations (lawfulness and transparency)

- Article 30 violations (record keeping)

- Article 32 violations (security of processing)

- Article 35 violations (impact assessments)

6. Audit Trail Crisis: Why You Can’t Prove Compliance

Traditional logging systems create a false sense of security. Your SIEM might show an employee accessed a file at 2:47 p.m., but it can’t show they copied its contents to ChatGPT at 2:48 p.m. This creates what researchers call “black box” compliance problems.

Critical Audit Gaps:

- No record of data shared with AI: Copy-paste actions invisible

- Can’t track AI platform usage: Personal accounts bypass corporate logging

- Lost data lineage: Information flow becomes untraceable

- Metadata extraction failures: Complex integrations hide data movement

A 2024 European Union Agency for Cybersecurity study found 56% of organizations can’t track AI integrations across their technology stack. When regulators request proof of proper data handling, organizations face an impossible task: proving a negative about systems they don’t control.

7. Regulatory Pressure Surge

The regulatory landscape pressure is intensifying rapidly. Regulatory concerns have climbed from 42% to 55% among business leaders in just two quarters. This surge reflects the reality that organizations are encountering real-world compliance challenges as AI moves from experimentation to production deployment.

8. Enhanced Compliance Requirements

The NIST AI Risk Management Framework now requires organizations to implement four core functions: GOVERN, MAP, MEASURE, and MANAGE. Combined with industry-specific requirements, organizations must maintain:

56% of organizations can’t track AI integrations across their technology stack.

Technical Compliance Infrastructure:

- Model-specific metrics (latency, accuracy, usage)

- Real-time anomaly detection

- Complete data lineage documentation

- Bias detection and mitigation processes

- Role-based access control for all AI systems

- Encryption for all AI data transfers

- PII anonymization in logs

Governance Requirements:

- Senior-level AI Governance Boards

- Documented risk assessments for all AI uses

- Declaration of Conformity for high-risk systems

- Post-market monitoring systems

- Regular third-party audits

Industry-Specific Additions:

- Healthcare: BAA equivalents for AI tools, patient consent workflows

- Financial: SOX control documentation, trading surveillance integration

- Government: FedRAMP compliance, security clearance considerations

For a billion-dollar company, one AI breach/violation can translate into hundreds of millions of dollars.

9. Cumulative Risk Reality

Organizations face a perfect storm of compliance risk:

- Multiple overlapping regulations (GDPR + AI Act + industry-specific)

- Retroactive liability for past AI usage

- Strict liability standards (intent doesn’t matter)

- Precedent-setting enforcement encouraging more actions

- Private right of action in some jurisdictions

With enforcement accelerating and penalties stacking, a single employee’s ChatGPT, Claude, or other AI usage could trigger violations of GDPR (4% of revenue), EU AI Act (7% of revenue), plus industry-specific penalties. For a billion-dollar company, that’s potentially $110 million from one incident.

A single employee’s use of AI could trigger violations of GDPR (4% of revenue), EU AI Act (7% of revenue), plus industry-specific penalties.

Current Control Failures

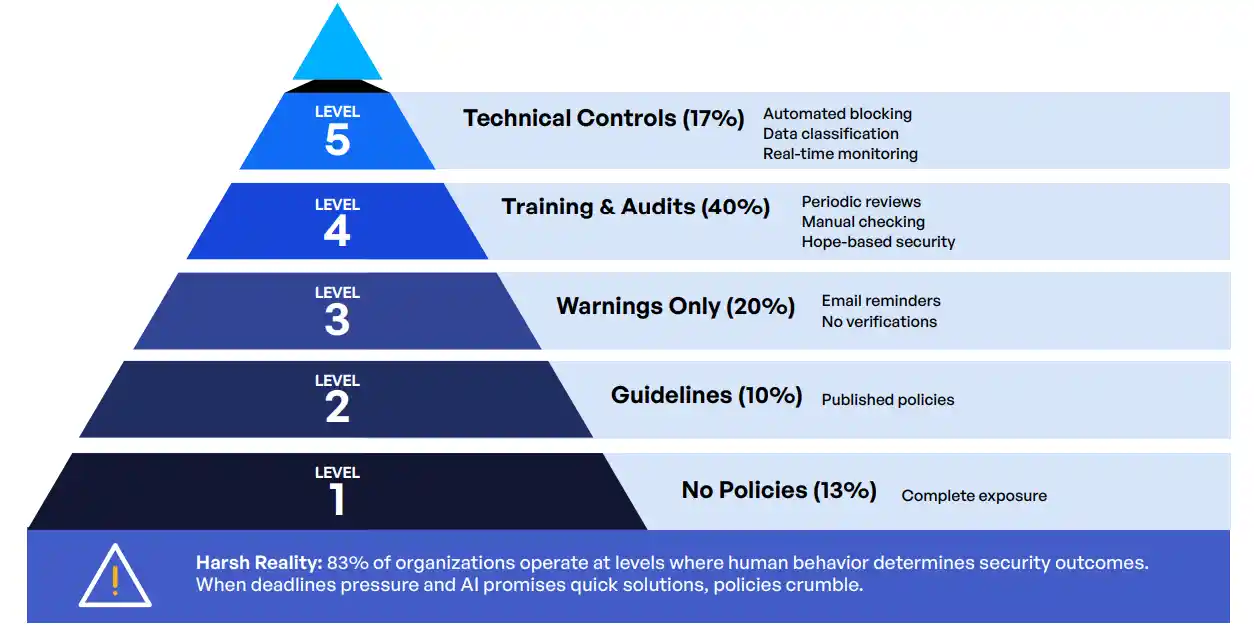

Security Control Pyramid

Kiteworks research identifies five levels of AI security maturity:

Technical Control Reality

Perhaps most concerning is the speed mismatch between AI deployment and security readiness. 73% are investing in AI-specific security tools as an afterthought rather than building security into their AI initiatives from the ground up.

The 3x overconfidence gap makes this worse—33% of executives believe they have comprehensive AI governance, but only 9% actually do.

Visibility Crisis

The research reveals a stark reality about organizational visibility. Nearly 24% of organizations have little to no confidence in identifying where their data is stored—a critical blind spot when AI systems need access to sensitive information across multiple environments.

Path Forward: Private Data Networks With AI Data Gateways

Organizations need a fundamental shift in how they approach AI security. The answer isn’t to ban AI tools—that ship has sailed. Instead, companies must create secure channels for AI integration while maintaining control over their data.

Strategic Response Reality

Forward-thinking organizations are responding to these challenges with fundamental changes to their AI strategies. Rather than retreating from AI adoption, they’re implementing more sophisticated governance frameworks that allow them to capture AI’s benefits while managing its risks.

The shift toward hybrid AI development represents one key adaptation. Over 50% of organizations now plan to deploy a combination of pre-built and internally built AI solutions, up dramatically from 27% in the previous quarter. This hybrid approach allows organizations to leverage proven external AI capabilities while maintaining greater control over sensitive data and proprietary processes.

Leadership structures are also evolving to address these challenges. Chief Information Officers now lead 87% of AI initiatives, reflecting recognition that AI implementation requires sophisticated technical security expertise rather than just strategic vision. This shift from CEO and Chief Innovation Officer leadership to CIO oversight signals that forward-thinking organizations view AI as a core infrastructure challenge that requires specialized data protection knowledge.

Forward-thinking organizations view AI as a core infrastructure challenge that requires specialized data protection knowledge.

Understanding the Architecture

When we talk about keeping data “within your control,” we need to be clear about what this means technically. There are two primary architectural approaches to prevent data leakage to public AI systems:

Architecture Option 1: RAG (Retrieval-Augmented Generation)

How it works: Your organization creates a proprietary repository of documents that remains separate from the public LLM. When users query the system:

- The query goes to your controlled interface (not directly to your AI tool)

- Your system retrieves relevant documents from your secure repository

- These documents augment the query with enterprise-specific information

- The LLM processes the augmented query and returns results

- Importantly: The LLM does not permanently retain your data after processing

Critical Privacy Consideration: While LLMs don’t learn from RAG context permanently, privacy risks remain during processing. The

LLM provider may log or cache data, and there’s risk of accidental memorization, especially if the model is later fine-tuned.

Domain-Specific Language Models (DSLMs): When embedded in customer-facing products, these are called Domain-Specific Language Models (DSLMs). This approach provides complete data isolation but requires significant technical resources.

Architecture Option 2: Private LLM Instance

How it works: Your organization deploys and controls its own LLM instance:

- Deploy an open-source model (like Llama) or build a custom model

- Fine-tune it using your proprietary data and domain knowledge

- Host it entirely within your infrastructure

- All processing happens on your servers with your security controls

- Zero data exposure to external parties

Kiteworks Approach: Secure AI Data Gateway With RAG

Kiteworks Private Data Network implements a secure RAG architecture that addresses the limitations of both public AI access and basic RAG implementations:

1. Data stays within your control—here’s how:

- Your sensitive data never leaves your security perimeter in unencrypted form

- The Kiteworks AI Data Gateway acts as an intelligent intermediary

- Users interact with a controlled interface instead of public ChatGPT, Claude, or other AI tool

- Data is encrypted end-to-end and only decrypted within your controlled environment

- The system retrieves and processes your documents without exposing them to public LLMs

2. Access is managed centrally

Comprehensive role-based and attribute-based access controls (aligned to NIST CSF) provide granular visibility and management of all AI interactions with your data. The gateway ensures:

- Users can only query data they’re authorized to access

- All interactions are logged and auditable

- No direct access to public LLMs—everything flows through your controls

- Real-time monitoring of what data is being queried and by whom

3. Compliance is built-in

Immutable audit logs automatically track every data interaction, providing irrefutable evidence of proper data handling across multiple regulatory frameworks including HIPAA, GDPR, and FedRAMP. This includes:

- Complete query history with user attribution

- Data classification and handling records

- Automated compliance reporting

- Privacy-preserving techniques like data masking when needed

4. Integration is secure

The platform implements zero-trust architecture for all AI connections, with unified security controls that integrate with your existing infrastructure:

- No direct employee access to public AI tools

- Secure connectors to approved enterprise systems

- Controlled data retrieval and processing

- Option to migrate to private LLM instances as needed

Key Technical Safeguards

Think of it as creating your own secure highway for AI traffic, with comprehensive governance capabilities that protect sensitive data while enabling innovation. Critical protections include:

- Data masking and tokenization before any external processing

- Secure enclaves for sensitive data processing

- Ephemeral processing with no permanent retention by AI models

- Strong guarantees about data handling, storage, and deletion

- Privacy-preserving techniques for regulated data (PII, PHI, etc.)

Implementation Architecture

A private data network for AI should include:

Data Classification Engine: Automatically identifies and tags sensitive information before it can be accessed by AI queries

Secure Repository: Your proprietary documents stored with encryption, access controls, and version management

AI Data Gateway Interface: The controlled access point where users submit queries—replacing direct AI access

Query Processing Layer: Retrieves relevant documents, applies security policies, masks sensitive data, and augments queries

Monitoring and Analytics: Real-time dashboards showing AI usage, data access patterns, and risk indicators

Compliance Automation: Built-in reports for regulatory requirements, audit trails, and certification support

The Bottom Line

Whether you choose RAG with secure gateways or private LLM instances, the critical requirement remains the same: Your users must access AI capabilities through your controlled interfaces, not directly through public AI tools. This is the only way to maintain security, ensure compliance, and prevent the data hemorrhaging documented throughout this guide.

Conclusion: The Choice Before You

Every day your organization delays implementing proper AI controls, thousands of data points leak into systems you don’t control. Your intellectual property trains models that competitors might access. Your compliance violations compound. Your breach risk multiplies.

But organizations that act now—implementing private data networks and AI governance—will thrive in the AI era. They’ll harness productivity gains while maintaining security. They’ll satisfy regulators while enabling innovation. They’ll protect their data while embracing the future.

Urgency Factor

The time for incremental security improvements has passed. As AI reshapes how businesses operate and quantum computing threatens traditional encryption, organizations need security architectures that can evolve as quickly as the threats they face. The 69% of companies that fear AI’s rapid changes aren’t being paranoid—they’re being realistic about the challenges ahead.

The technology exists. The frameworks are proven. The only question is whether your organization will lead or explain to stakeholders why it didn’t.

69% of companies that fear AI’s rapid changes aren’t being paranoid—they’re being realistic about the challenges ahead.

Appendix A: Technical Specifications

Minimum Requirements for AI Data Gateways:

- TLS 1.3 encryption for all connections

- SAML 2.0/OAuth 2.0 authentication

- Real-time DLP scanning

- API rate limiting and anomaly detection

- Immutable audit logging

- Multi-region deployment options

Appendix B: Regulatory Quick Reference

| Regulation | Key AI Requirements | Penalties |

|---|---|---|

| GDPR | Lawful basis, transparency, data minimization | Up to €20M or 4% revenue |

| HIPAA | Access controls, audit logs, minimum necessary | $50K-$1.5M per violation |

| SOX | Internal controls, data integrity | Criminal penalties possible |

| CCPA | Disclosure, deletion rights | $2,500-$7,500 per violation |

| EU AI Act | Risk assessments, human oversight | Up to €35M or 7% revenue |

Glossary

OAuth: Open standard for authorization allowing third-party access.

Shadow AI: Unauthorized use of AI tools outside IT oversight

Private Data Network: Isolated environment for secure data processing

AI Data Gateway: Control point between enterprise data and AI services

Prompt Injection: Attack method manipulating AI through crafted inputs